To scale AI sustainably, enterprises must evolve beyond pilots and into a governed, subscription-driven operating model—one that links usage to economics, policy, and performance. Subscription platforms are emerging as the core infrastructure that transforms AI from experimental projects into durable enterprise capabilities. The organizations that master this model will unlock predictable growth, controlled risk, and measurable AI ROI.

Enterprise leaders are discovering a hard truth: AI adoption is easy. AI sustainability is hard.

It’s easy to roll out a copilot, run pilots on a foundation model, and get early wins. It’s much harder to scale AI across hundreds of products, thousands of users, and regulated data—without turning AI into an unpredictable cost center or a governance nightmare.

The constraint isn’t only model quality. It’s the economics and operating model behind the AI stack.

Over the last decade, subscription platforms transformed how enterprises consume cloud, software, and infrastructure—making elastic growth possible through metering, entitlements, forecasting, and governance. Now the same shift is happening in AI. Subscription platforms are becoming foundational to the AI economy because they provide the mechanism enterprises need to scale responsibly: they convert upfront risk into governed consumption, make unit economics visible, and embed controls without slowing innovation.

This article introduces a practical framework for enterprise AI scale: a five-layer AI stack, and the “AI Monetization Plane” that enables it.

1) The AI economy is a five-layer stack

A useful way to understand AI industrialization is as a five-layer ecosystem:

- Energy — electricity, grid capacity, cooling

- Chips — GPUs/accelerators, high-performance networking

- Infrastructure — data centers/cloud, storage, orchestration, observability

- Models — foundation models, fine-tunes, embeddings, safety systems

- Applications — copilots, workflows, vertical AI, agents

Most organizations focus on layers 4 and 5 (models and applications) because that’s where value is visible. But scaling failures typically originate in layers 1–3 (energy, chips, and infrastructure), where constraints are physical and financial, and then compound upward into governance, reliability, and customer trust.

When AI is small, costs are “absorbed.” When AI scales, three realities show up fast:

- AI costs are multi-dimensional (tokens, GPU time, retrieval, network, safety).

- Demand is spiky and uncertain (today’s experiment becomes tomorrow’s enterprise dependency).

- Governance becomes non-negotiable (privacy, residency, audit trails, policy enforcement).

This is why the next wave of enterprise AI won’t be won by the best demo. It will be won by the best operating model for scale.

2) The sustainability problem: AI value is real, but the cost curve is slippery

AI pilots often look inexpensive until they hit production complexity:

- The model call isn’t the only cost; retrieval pipelines, vector stores, observability, and safety layers add up.

- Latency and uptime expectations rise once workflows become business-critical.

- Security and compliance controls must be consistent across teams, vendors, and regions.

- Shadow AI emerges when approved paths are slow or unclear.

As AI adoption grows, leadership needs consistent answers to five questions:

- What does it cost to deliver this AI capability (fully loaded)?

- Who is consuming it, and at what rate?

- What guardrails limit risk and runaway spend?

- How do we fund growth without big upfront bets?

- How do we tie spend to business outcomes?

“The next frontier in AI won’t be defined by bigger models—it will be defined by the operating models that make them sustainable. Subscriptions are the bridge between innovation and enterprise-scale reality.”

Piyush Anandani, Director of Innovation – Enterprise Monetization & Subscription Platforms, Hewlett Packard Enterprise

If those questions can’t be answered, AI becomes fragile—politically and financially—even when it’s technically effective.

3) Why subscriptions matter: converting AI risk into AI throughput

Subscriptions are not “billing.” In enterprise AI, they are the mechanism that turns experimental capability into a scalable service. They bring three essential properties.

3.1 Optionality: capex-style commitments become opex-style control

AI demand curves are uncertain. Subscriptions and consumption-based models allow organizations to scale based on validated consumption and outcomes, rather than locking into capacity too early. Optionality is critical because models evolve quickly, and workloads shift as agents, retrieval, and multimodal patterns mature.

3.2 Unit economics: visibility enables optimization

At scale, “AI spend” cannot remain a shared overhead. Subscriptions introduce metering and attribution so you can measure:

- cost per contract summarized

- cost per invoice processed

- cost per developer feature shipped

Once unit economics exist, optimization becomes systematic: routing requests to smaller models, caching responses, batching inference, reducing retrieval calls, improving prompts, enforcing quotas, and targeting premium tiers where ROI is proven.

3.3 Governance at speed: controls without slowing innovation

Enterprises often swing between two extremes: lock down AI and stall adoption, or open access and suffer compliance/cost fallout. Subscription platforms enable the middle path through policy-aware entitlements—who can use what model, what data class, what limits, and what residency rules.
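To make the idea of policy-aware entitlements concrete, here is a minimal sketch of a request-time check. It assumes a hypothetical `Entitlement` record per team; the field names, model names, data classes, and region labels are illustrative and do not come from any specific platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entitlement:
    """Hypothetical entitlement record; every field name is illustrative."""
    models: frozenset          # models the tenant may call
    data_classes: frozenset    # data classifications allowed in prompts
    regions: frozenset         # regions where inference may run (residency)
    monthly_token_limit: int   # spend guardrail expressed in tokens


def check_request(ent, model, data_class, region, tokens_used, tokens_requested):
    """Return (allowed, reason) for a single request against one entitlement."""
    if model not in ent.models:
        return False, "model not entitled"
    if data_class not in ent.data_classes:
        return False, "data class not permitted"
    if region not in ent.regions:
        return False, "residency rule violated"
    if tokens_used + tokens_requested > ent.monthly_token_limit:
        return False, "monthly token limit exceeded"
    return True, "ok"


# Assumed example tenant: a claims team restricted to EU processing.
claims_team = Entitlement(
    models=frozenset({"small-model", "large-model"}),
    data_classes=frozenset({"public", "internal"}),
    regions=frozenset({"eu-west"}),
    monthly_token_limit=1_000_000,
)

print(check_request(claims_team, "large-model", "internal", "eu-west", 900_000, 50_000))
print(check_request(claims_team, "large-model", "restricted", "eu-west", 0, 1_000))
```

The first call is allowed; the second is rejected because the data class is outside the entitlement. Returning a reason alongside the decision is one way to keep the approved path clear, so teams know why a request was blocked.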

4) The “AI Monetization Plane”: the missing enterprise layer

To scale AI like an enterprise capability, you need a layer that connects usage to governance and economics. I call this the AI Monetization Plane—the control plane that makes AI measurable, governable, and financially scalable.

Diagram 1 (conceptual): AI Stack Monetization Framework

Picture horizontal layers that cut across all five layers of the stack: identity and entitlements, metering, rating, pricing, billing, and AI FinOps. Together, these are what make AI behave like a product rather than a science project.

5) How subscriptions scale each of the five layers (practical patterns)

Layer 1: Energy — treat power like a governed utility, not invisible overhead

AI growth turns energy into a first-class constraint. Subscription thinking here means moving from static assumptions to consumption-aligned planning:

- power-aware scheduling for non-urgent workloads

- internal incentives that reward teams for reducing waste

- operational “budgets” tied to energy/cooling constraints

Mini-vignette: A team scaling batch inference sees cost spikes at peak hours. By shifting non-urgent processing to lower-demand windows and enforcing workload quotas, they reduce spend volatility without reducing output.
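The shift described in this vignette can be sketched as a very small scheduling rule. The peak window (09:00 to 17:59) and the job names are assumptions made for illustration; a real scheduler would take its windows from energy or pricing signals.

```python
# Assumption: hours 9..17 are the high-demand (peak) window.
PEAK_HOURS = set(range(9, 18))


def next_off_peak_hour(hour, peak_hours=PEAK_HOURS):
    """Return the first hour at or after `hour` (wrapping at 24) that is off-peak."""
    for offset in range(24):
        candidate = (hour + offset) % 24
        if candidate not in peak_hours:
            return candidate
    raise ValueError("every hour is marked as peak")


def schedule(jobs, current_hour):
    """Map each job name to its start hour. Urgent jobs run immediately."""
    plan = {}
    for name, urgent in jobs:
        plan[name] = current_hour if urgent else next_off_peak_hour(current_hour)
    return plan


# Hypothetical jobs submitted at 14:00: one urgent, one deferrable.
plan = schedule([("fraud-scoring", True), ("nightly-embeddings", False)], current_hour=14)
print(plan)  # {'fraud-scoring': 14, 'nightly-embeddings': 18}
```

Output is unchanged in this sketch; only the start time of the deferrable job moves, which is the point of the vignette.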

Layer 2: Chips — make GPU capacity a product, not a political bottleneck

GPU scarcity becomes a governance and prioritization issue as much as a technical one. Subscription patterns include:

- reserved capacity tiers for predictable workloads

- burst pools for peaks and experimentation

- metered GPU-hours with showback/chargeback

- premium SLAs for latency-sensitive business flows

Mini-vignette: Engineering and data science compete for the same GPU cluster. With metered GPU-hours and tenant quotas, teams align to utilization goals. Waste becomes visible, and priority flows are protected by policy.
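A minimal sketch of metered GPU-hours with tenant quotas might look like the following. The `GpuMeter` class, the tenant names, the quotas, and the flat internal rate are all hypothetical, chosen only to show how quota enforcement and showback fit together.

```python
class GpuMeter:
    """Tracks GPU-hours per tenant against a quota (all names are hypothetical)."""

    def __init__(self, quotas):
        self.quotas = dict(quotas)  # tenant -> GPU-hours allowed per period
        self.used = {}              # tenant -> GPU-hours consumed so far

    def request(self, tenant, gpus, hours):
        """Record the job if it fits the tenant's remaining quota; return True/False."""
        needed = gpus * hours
        current = self.used.get(tenant, 0.0)
        if current + needed > self.quotas.get(tenant, 0.0):
            return False
        self.used[tenant] = current + needed
        return True

    def showback(self, rate_per_gpu_hour):
        """Report cost per tenant at a flat internal rate (an assumed pricing rule)."""
        return {t: round(h * rate_per_gpu_hour, 2) for t, h in self.used.items()}


meter = GpuMeter({"engineering": 100, "data-science": 40})
accepted = meter.request("engineering", gpus=8, hours=10)   # 80 GPU-hours, within quota
rejected = meter.request("data-science", gpus=4, hours=12)  # 48 GPU-hours, over quota
print(accepted, rejected, meter.showback(rate_per_gpu_hour=2.5))
```

A production system would add burst pools and priority classes on top of this; the sketch only shows the two properties the vignette relies on, namely that consumption is visible per tenant and that limits are enforced by policy rather than negotiation.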

Layer 3: Infrastructure — build an AI platform that behaves like cloud

The cloud scaled because it standardized consumption, metering, and controls. AI platforms need the same:

- tenant model (apps/teams as tenants)

- dev/test/prod tiers with policies

- standardized usage events across pipelines

- AI FinOps dashboards for forecasting and anomaly detection

Mini-vignette: A company launches multiple copilots. Without standard telemetry, costs are blamed on “the platform.” With common usage events and cost attribution by tenant, leaders can invest rationally—scaling winners and retiring low-ROI experiments.
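Cost attribution by tenant depends on every pipeline emitting the same event shape. The sketch below assumes a hypothetical `UsageEvent` schema (tenant, capability, cost, and business units delivered) and rolls events up into cost per unit; the tenants and figures are made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UsageEvent:
    """Hypothetical standard usage event emitted by every AI pipeline."""
    tenant: str        # app or team that owns the usage
    capability: str    # e.g. "contract-summary"
    cost_usd: float    # fully loaded cost of this call
    units: int         # business units delivered, e.g. contracts summarized


def attribute(events):
    """Aggregate cost and units per (tenant, capability) and derive cost per unit."""
    totals = defaultdict(lambda: {"cost_usd": 0.0, "units": 0})
    for e in events:
        key = (e.tenant, e.capability)
        totals[key]["cost_usd"] += e.cost_usd
        totals[key]["units"] += e.units
    return {
        key: {**t, "cost_per_unit": t["cost_usd"] / t["units"] if t["units"] else None}
        for key, t in totals.items()
    }


events = [
    UsageEvent("legal-copilot", "contract-summary", 1.5, 3),
    UsageEvent("legal-copilot", "contract-summary", 0.5, 1),
    UsageEvent("finance-copilot", "invoice-processing", 0.25, 5),
]
report = attribute(events)
for key, row in report.items():
    print(key, row)
```

With a report like this, "the platform" stops being the default cost owner: each tenant sees its own spend and its own cost per unit of business output.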

Layer 4: Models — productize models with measurable throughput and trust

Token-based pricing is only the beginning. Enterprises need richer dimensions:

- latency tiering (real-time vs batch)

- context size and retrieval costs

- safety controls and audit requirements

- data residency and compliance posture

Mini-vignette: A regulated workflow requires stronger filtering and audit logs. The enterprise offers a premium compliance tier with higher cost-to-serve but clear value—preventing shadow AI while enabling adoption.

Layer 5: Applications — align subscriptions to outcomes

This is where ROI lives. The best packaging is often hybrid:

- baseline subscription for access

- metered usage for heavy consumers

- transaction pricing for workflow automation

- outcome-linked commercial models where feasible

Mini-vignette: A service team uses an AI agent to draft responses and retrieve knowledge. Pricing the capability per case resolved aligns spend with value and improves adoption because business owners can justify the investment in operational terms.
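The hybrid packaging above can be expressed as a simple rating function. All prices and allowances below (seat fee, included tokens, overage rate, per-case fee) are placeholder assumptions, not recommended price points.

```python
def monthly_charge(seats, tokens_used, cases_resolved, *,
                   seat_fee=30.0, included_tokens=1_000_000,
                   overage_per_1k_tokens=0.02, fee_per_case=0.50):
    """Combine baseline, metered, and transaction pricing into one monthly charge.

    Every default value here is an illustrative assumption.
    """
    base = seats * seat_fee                                   # baseline subscription
    overage_tokens = max(0, tokens_used - included_tokens)    # usage beyond allowance
    metered = round(overage_tokens / 1000 * overage_per_1k_tokens, 2)
    transactional = round(cases_resolved * fee_per_case, 2)   # per case resolved
    total = round(base + metered + transactional, 2)
    return {"base": base, "metered": metered,
            "transactional": transactional, "total": total}


charge = monthly_charge(seats=10, tokens_used=1_500_000, cases_resolved=200)
print(charge)
```

Keeping the three components separate in the result, rather than returning only a total, lets a business owner see how much of the bill tracks access, how much tracks heavy usage, and how much tracks resolved cases.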

6) A simple unit economics model you can operationalize

To make AI scalable, you need a repeatable way to calculate cost-to-serve. A practical model:

Cost per AI Transaction = Model Cost (tokens in/out × rate)

+ Retrieval Cost (vector queries, embeddings, storage reads)

+ Orchestration Cost (workflow engine, tools, agent steps)

+ Governance Cost (policy checks, safety filters, logging, review)

+ Overhead (observability, incident response, platform support)
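The cost-to-serve model above is a sum of five components, which can be sketched as a single function. The token rates and per-transaction component costs in the example are invented for illustration; in practice they would come from metering data.

```python
def cost_per_transaction(tokens_in, tokens_out, rate_in_per_1k, rate_out_per_1k,
                         retrieval=0.0, orchestration=0.0,
                         governance=0.0, overhead=0.0):
    """Sum the five cost components for one AI transaction (currency units)."""
    # Model cost: input and output tokens priced at their own per-1k rates.
    model = tokens_in / 1000 * rate_in_per_1k + tokens_out / 1000 * rate_out_per_1k
    components = {
        "model": model,
        "retrieval": retrieval,          # vector queries, embeddings, storage reads
        "orchestration": orchestration,  # workflow engine, tools, agent steps
        "governance": governance,        # policy checks, safety filters, logging
        "overhead": overhead,            # observability, incident response, support
    }
    components["total"] = sum(components.values())
    return components


# Illustrative numbers only; none of these rates come from a real price list.
breakdown = cost_per_transaction(
    tokens_in=2000, tokens_out=500,
    rate_in_per_1k=0.003, rate_out_per_1k=0.015,
    retrieval=0.002, orchestration=0.001, governance=0.0015, overhead=0.002,
)
print(f"total cost per transaction: {breakdown['total']:.4f}")
```

Returning the breakdown rather than just the total makes it easier to see which component to optimize first. In this made-up example the model call accounts for roughly two thirds of the cost.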

Now convert that into business language:

Value per Transaction could be minutes saved, defects reduced, conversion uplift, or cycle-time reduction. When cost and value are measurable, packaging becomes rational:

- Standard tier: low governance overhead, broad access

- Premium tier: higher controls, stronger SLA, higher cost-to-serve

- Outcome tier: price tied to measurable KPI lift

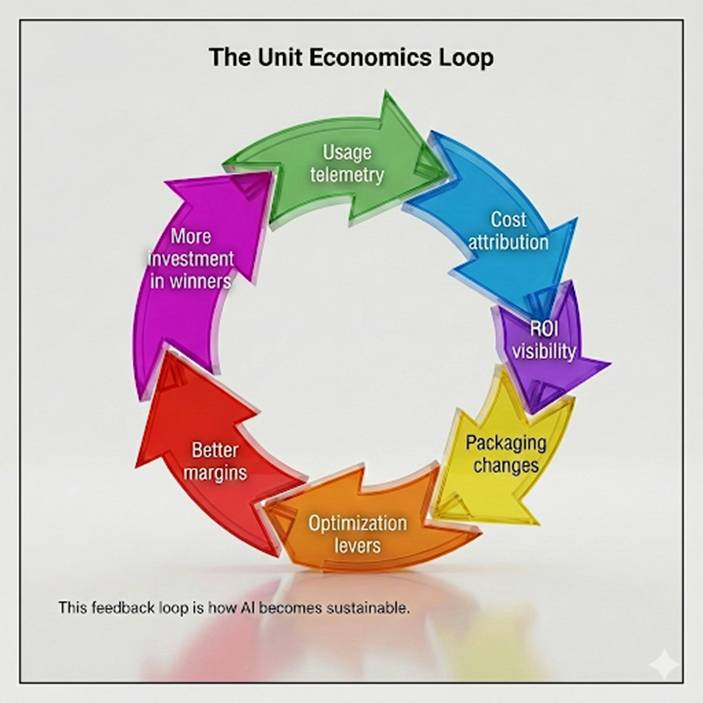

Diagram 2 (conceptual): The Unit Economics Loop

7) What changes when subscription platforms govern AI

Organizations that implement the Monetization Plane typically unlock five outcomes:

- Faster adoption with fewer budget fights — start small, scale predictably

- Cost transparency and accountability — unit economics become defensible

- Higher utilization of scarce resources — GPUs and services allocated by measurable demand

- Reduced compliance and security risk — entitlements, audit trails, and policies are built-in

- A durable path to monetization — AI features become repeatable revenue (or disciplined internal services)

In short: subscriptions don’t just help you “charge for AI.” They help you run AI as an enterprise product.

Conclusion: the next winner will engineer economics, not just models

The AI economy is scaling across energy, chips, infrastructure, models, and applications. But enterprise-scale success depends on sustainability—cost control, governance, and value measurement—not just technical performance.

That’s why subscription platforms are becoming foundational to enterprise AI. They provide the operating model that enterprises need to scale with confidence: governed consumption, visible unit economics, and policy-aware controls. Organizations that build this layer early will scale faster, with less risk, and with a clearer path from innovation to durable business value.

Author bio:

Piyush is a Director of Innovation leading enterprise-scale transformation programs for a major technology firm, focused on subscription platforms, monetization architecture, and AI operating models. His work centers on building scalable, governed, and economically sustainable foundations for enterprise adoption of subscription models in Technology and Media firms.